Forget sleek gadgets and flashy press conferences. Apple’s latest innovation doesn’t come wrapped in a shiny box, but it packs a punch nonetheless. The tech giant recently published “MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training”, a research paper that examines how to build and pre-train artificial intelligence (AI) models that work with both text and images.

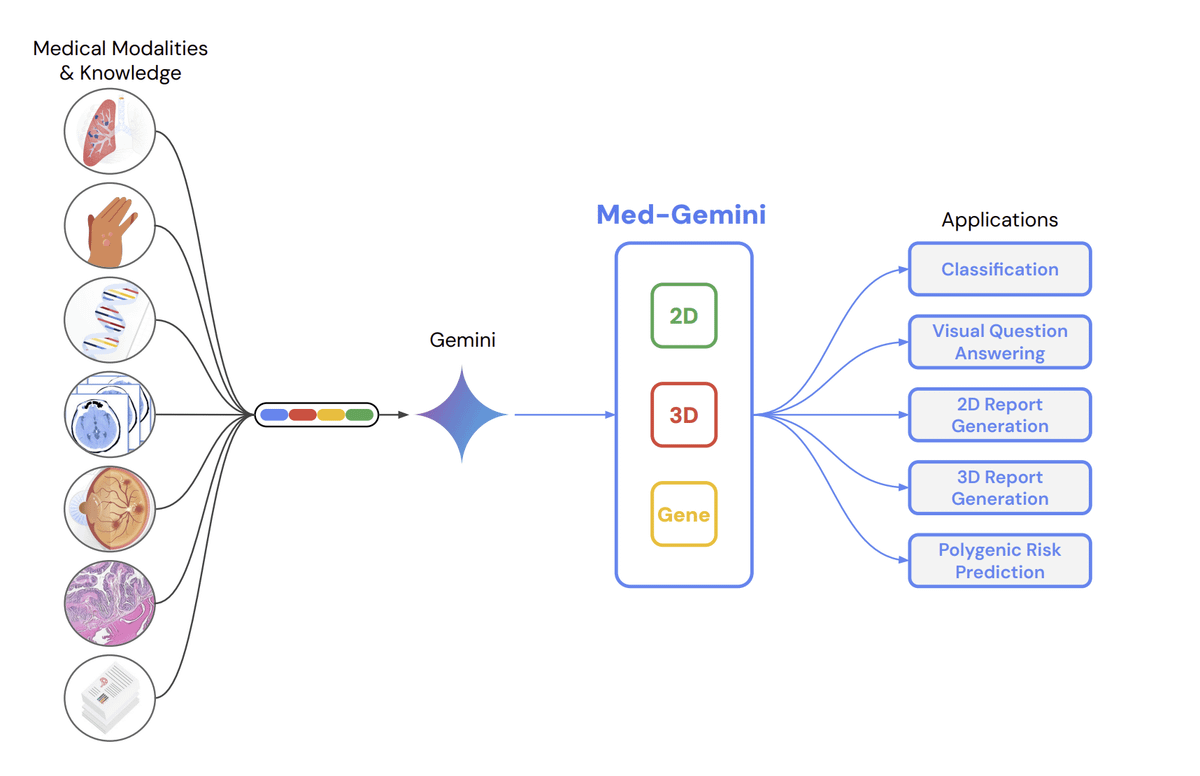

Here’s why this seemingly dry research could be a game-changer: MM1 is a family of Multimodal Large Language Models (MLLMs). Traditional LLMs work with text and code. MLLMs like MM1 are trained to understand and process text and images together.

Think of it this way: current AI assistants like Siri can understand your spoken words but struggle to grasp the context of a photo you show them. MM1 targets that gap. It is pre-trained on a mix of image-caption pairs, interleaved image-text documents, and text-only data, and the paper finds that getting this mix right is key to the model’s performance.

The implications are exciting. Imagine a future where your iPhone camera doesn’t just capture memories but becomes an intelligent tool. With a model like MM1, your virtual assistant could translate a foreign restaurant menu in a photo or automatically generate witty captions for your travel pictures.

The possibilities extend beyond simple image recognition. In the paper, MM1 posts competitive results on tasks such as image captioning and visual question answering, in which the model analyzes an image and answers questions about it by combining visual and textual information. This opens doors for smarter search features or educational tools that blend text- and image-based learning.

Although the paper focuses on research, it suggests Apple is laying the groundwork for future product integration. If MM1’s results carry over into products, they could enable a wave of new features across Apple’s devices and software and change how we interact with technology.

So, while MM1 isn’t a product launch, it marks an important step forward for Apple’s AI ambitions. It points toward a future where our devices not only understand our words but also see the world through our eyes, ushering in a more intuitive and intelligent era of human-machine interaction.
