Imagine an AI that doesn’t just see, hear, or read, but understands the world by seamlessly weaving these different senses together. This is the promise of Multimodal AI, an approach that moves beyond models limited to a single type of data and combines several of them to reach a deeper level of understanding.

1. What is Multimodal AI?

Think of Multimodal AI as an orchestra conductor, bringing together various instruments (data types) like text, images, audio, and sensor readings to create a harmonious symphony of understanding. Unlike traditional AI models trained on a single type of data, Multimodal AI learns the connections between data types, such as how a spoken word relates to the object shown in a photo, and uses that shared context to interpret each input.

This holistic approach empowers AI to:

- Make more accurate predictions: By considering multiple perspectives, Multimodal AI can paint a more complete picture, leading to more informed decisions and insights.

- Gain deeper understanding: The interplay between different data types reveals patterns and nuances that no single data type shows on its own.

- Create more natural interactions: With the ability to process the world like humans do (through multiple senses), Multimodal AI fosters more intuitive and engaging interactions between humans and machines.

2. How does Multimodal AI work?

Imagine a well-rehearsed dance:

- Individual Virtuosos: Specialized AI modules (often called encoders), each trained on a specific data type, analyze their assigned input and extract its key features.

- The Fusion Waltz: Different pieces of information from various modalities are brought together. This can happen in different ways, like merging text descriptions with image features or combining insights from speech and facial expressions.

- A Grand Finale: Based on the fused understanding, the AI system generates an output that leverages the strengths of different modalities. This could be a text response enriched with insights from images, an image generated based on a textual description, or even an action in the real world like a self-driving car adjusting its course based on combined sensor data.
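The three stages above can be sketched as a toy pipeline. This is a minimal illustration under loudly stated assumptions: the encoders are hypothetical hand-written feature functions standing in for the learned neural networks a real system would use, and the output weights are picked by hand rather than trained. The sketch shows two common ways the fusion step can happen: early fusion, which concatenates feature vectors before a single output stage, and late fusion, which averages scores that each modality produced on its own.

```python
"""Toy three-stage multimodal pipeline: encode each modality, fuse, decide.

All names, features, and weights here are hypothetical illustrations.
Real systems use learned encoders, not these hand-written functions.
"""
from typing import List, Sequence

# Hypothetical vocabulary for the toy text encoder.
VOCAB = ["cat", "dog", "meow", "bark"]


def encode_text(text: str) -> List[float]:
    """Stage 1 (text): count occurrences of each vocabulary word."""
    tokens = text.lower().split()
    return [float(tokens.count(word)) for word in VOCAB]


def encode_image(pixels: Sequence[Sequence[float]]) -> List[float]:
    """Stage 1 (image): mean brightness and brightness range of a grayscale grid."""
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), max(flat) - min(flat)]


def encode_audio(samples: Sequence[float]) -> List[float]:
    """Stage 1 (audio): root-mean-square energy of the waveform."""
    return [(sum(s * s for s in samples) / len(samples)) ** 0.5]


def early_fusion(*features: List[float]) -> List[float]:
    """Stage 2 (early fusion): concatenate per-modality feature vectors."""
    fused: List[float] = []
    for f in features:
        fused.extend(f)
    return fused


def linear_score(features: Sequence[float], weights: Sequence[float],
                 bias: float = 0.0) -> float:
    """Stage 3: a linear output head. A trained model would learn these weights."""
    if len(features) != len(weights):
        raise ValueError("feature and weight lengths differ")
    return sum(f * w for f, w in zip(features, weights)) + bias


def late_fusion(scores: Sequence[float]) -> float:
    """Alternative stage 2 (late fusion): average per-modality scores."""
    return sum(scores) / len(scores)


if __name__ == "__main__":
    text_f = encode_text("a cat says meow")                 # [1.0, 0.0, 1.0, 0.0]
    image_f = encode_image([[0.0, 100.0], [200.0, 100.0]])  # [100.0, 200.0]
    audio_f = encode_audio([1.0, -1.0, 1.0, -1.0])          # [1.0]

    # Early fusion: one 7-element vector goes to a single output head.
    fused = early_fusion(text_f, image_f, audio_f)
    hand_picked = [1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.5]     # hypothetical weights
    print("early fusion score:", linear_score(fused, hand_picked))  # 2.5

    # Late fusion: score each modality separately, then combine the scores.
    text_score = linear_score(text_f, [1.0, -1.0, 1.0, -1.0])  # 2.0
    audio_score = linear_score(audio_f, [0.5])                 # 0.5
    print("late fusion score:", late_fusion([text_score, audio_score]))  # 1.25
```

One trade-off often cited between the two: early fusion lets a single output stage see every feature at once, while late fusion keeps the modalities independent until the very end, which makes it simpler to handle an input where one modality is missing.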

However, this dance isn’t without its challenges. Training these models to translate and connect information across diverse modalities requires sophisticated techniques and large amounts of paired data, such as images matched with captions. Additionally, understanding how these models arrive at their conclusions can be tricky because of their complex inner workings.

3. Examples of Multimodal AI in Action

The potential of Multimodal AI extends far beyond theoretical discussions. Here are a few real-world examples showcasing its transformative power:

- RunwayML: Imagine generating stunning videos simply by describing them with text! This platform empowers anyone to become a content creator, pushing the boundaries of video production.

- Otter.ai: This AI meeting assistant acts as your virtual notetaker, transcribing conversations, capturing key points, and analyzing sentiment, turning meetings into goldmines of actionable insights.

- Embodied Moxie: This social robot uses a combination of speech recognition, facial recognition, and emotional understanding to interact with children and teach them valuable social-emotional skills in a playful and engaging way.

- OpenAI Codex: This tool translates natural language descriptions into code, opening doors for non-programmers to create software applications. Strictly speaking, both its input and its output are text, so it shows translation between two kinds of representation rather than between different senses.

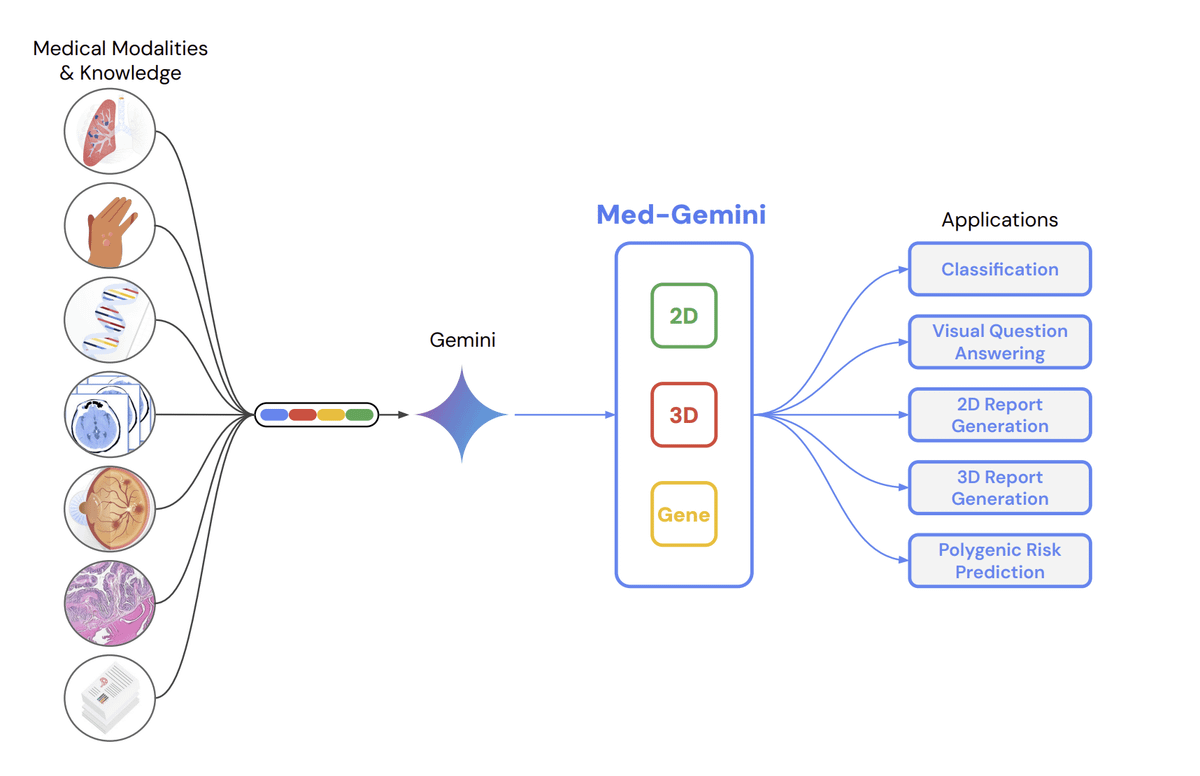

These examples offer just a glimpse of the potential of Multimodal AI. As research and development continue to accelerate, we can expect even more applications in healthcare, education, customer service, and many other fields. The future is multisensory, and Multimodal AI is poised to lead the way, transforming how we interact with technology and understand the world around us.

So, the next time you see an AI interacting with the world in a nuanced and seemingly “human-like” way, remember that it’s not magic; it’s the power of Multimodal AI at work!
